Data Quality

Data Quality Definition

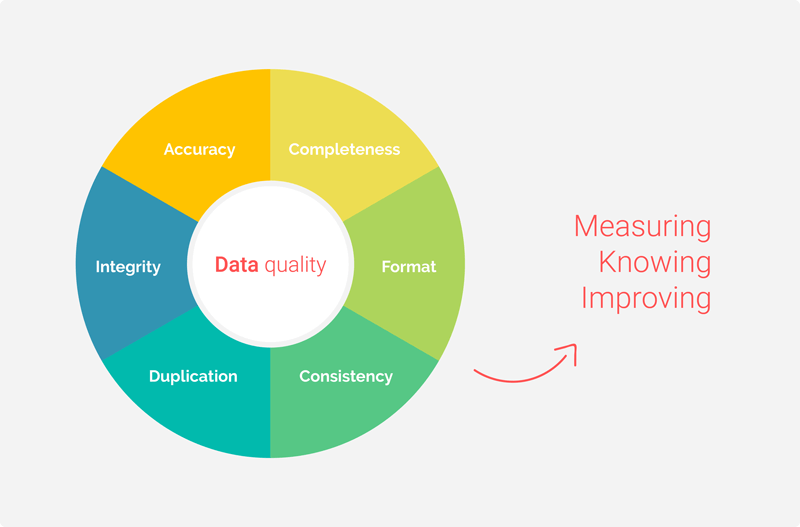

Data quality is the measure of how well suited a data set is to serve its specific purpose. Measures of data quality are based on data quality characteristics such as accuracy, completeness, consistency, validity, uniqueness, and timeliness.

FAQs

What is Data Quality?

Data quality also refers to the development and implementation of activities that apply quality management techniques to data to ensure it is fit to serve an organization's specific needs in a particular context. Data that is fit for its intended purpose is considered high quality data.

Examples of data quality issues include duplicated, incomplete, inconsistent, incorrect, poorly defined, and poorly organized data, as well as poor data security.

Data quality assessments are carried out by data quality analysts, who assess and interpret each individual data quality metric, aggregate the metrics into an overall quality score, and give the organization a percentage that represents the accuracy of its data. A low score on a data quality scorecard indicates poor data quality: data that is of low value, is misleading, and can lead to poor decisions that may harm the organization.
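As a rough illustration of the aggregation step, the sketch below assumes each dimension has already been scored as a fraction between 0 and 1 and combines the scores with a weighted average. The dimension names, scores, and weights are hypothetical; the text does not prescribe a particular weighting scheme.

```python
# A minimal sketch of rolling per-dimension scores into one percentage.
# Dimension names, scores, and weights are hypothetical examples.


def overall_quality_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Return the weighted average of per-dimension scores (each 0.0-1.0) as a percentage."""
    total_weight = sum(weights[dim] for dim in scores)
    if total_weight == 0:
        raise ValueError("weights must not all be zero")
    weighted = sum(scores[dim] * weights[dim] for dim in scores)
    # Round to one decimal place for display on a scorecard.
    return round(100 * weighted / total_weight, 1)


if __name__ == "__main__":
    scores = {"accuracy": 0.92, "completeness": 0.85, "uniqueness": 0.99}
    weights = {"accuracy": 2.0, "completeness": 1.0, "uniqueness": 1.0}  # accuracy counts double (assumption)
    print(overall_quality_score(scores, weights))  # → 92.0
```

Weighting lets an organization emphasize the dimensions that matter most for its use case; an unweighted mean is the special case where every weight is equal.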

Data quality rules are an integral component of data governance, which is the process of developing and establishing a defined, agreed-upon set of rules and standards by which all data across an organization is governed. Effective data governance should harmonize data from various data sources, create and monitor data usage policies, and eliminate inconsistencies and inaccuracies that would otherwise negatively impact data analytics accuracy and regulatory compliance.

Data Quality Dimensions

By which metrics do we measure data quality? There are six main dimensions of data quality: accuracy, completeness, consistency, validity, uniqueness, and timeliness.

- Accuracy: The data should reflect real-world conditions; accuracy can be confirmed against a verifiable source.

- Completeness: Completeness measures whether all required values are present in the data.

- Consistency: Data consistency refers to the uniformity of data as it moves across networks and applications. The same data values stored in different locations should not conflict with one another.

- Validity: Data should be collected according to defined business rules and parameters, and should conform to the expected format and fall within the allowed range.

- Uniqueness: Uniqueness ensures there are no duplicate or overlapping records within or across data sets. Data cleansing and deduplication can help remedy a low uniqueness score.

- Timeliness: Timely data is data that is available when it is required. Data may be updated in real time to ensure that it is readily available and accessible.
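Several of these dimensions reduce to simple ratios once a rule is chosen. The sketch below scores completeness, validity, uniqueness, timeliness, and consistency on a tiny in-memory data set. The records, field names, email pattern, and 30-day freshness window are hypothetical assumptions; accuracy is left out because it requires comparison against a trusted reference source.

```python
# A minimal sketch of scoring five of the six dimensions on a tiny data set.
# Records, field names, the email rule, and the 30-day window are hypothetical.
import re
from datetime import datetime, timedelta

# Simplistic format rule used for the validity check (assumption).
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(records, field):
    """Share of records in which `field` is present and non-empty."""
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def validity(records, field, pattern):
    """Share of non-empty `field` values that match the expected format."""
    values = [r[field] for r in records if r.get(field)]
    if not values:
        return 1.0
    return sum(1 for v in values if pattern.match(v)) / len(values)


def uniqueness(records, key):
    """Ratio of distinct `key` values to records (1.0 means no duplicates)."""
    values = [r[key] for r in records]
    return len(set(values)) / len(values)


def timeliness(records, field, now, max_age):
    """Share of records whose `field` timestamp is within `max_age` of `now`."""
    fresh = sum(1 for r in records if now - r[field] <= max_age)
    return fresh / len(records)


def consistency(store_a, store_b):
    """Share of ids stored in both locations whose values agree."""
    shared = store_a.keys() & store_b.keys()
    if not shared:
        return 1.0
    return sum(1 for k in shared if store_a[k] == store_b[k]) / len(shared)


records = [
    {"id": 1, "email": "ana@example.com", "updated": datetime(2024, 5, 1)},
    {"id": 2, "email": "", "updated": datetime(2024, 5, 2)},
    {"id": 2, "email": "bob@example", "updated": datetime(2023, 1, 1)},
    {"id": 3, "email": "cy@example.org", "updated": datetime(2024, 4, 30)},
]
now = datetime(2024, 5, 3)

print("completeness:", completeness(records, "email"))          # 0.75
print("validity:", validity(records, "email", EMAIL_RE))        # 2 of 3 non-empty emails
print("uniqueness:", uniqueness(records, "id"))                 # 0.75
print("timeliness:", timeliness(records, "updated", now, timedelta(days=30)))  # 0.75
print("consistency:", consistency({1: "ana@example.com", 3: "cy@example.org"},
                                  {1: "ana@example.com", 3: "cy@example.net"}))  # 0.5
```

Each function returns a fraction between 0 and 1, so the results can feed directly into an aggregate scorecard.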

How to Improve Data Quality

Data quality can be improved with data quality tools, which typically provide data quality management capabilities such as:

- Data Profiling - The first step in the data quality improvement process is understanding your data. Data profiling is the initial assessment of the current state of the data sets.

- Data Standardization - Disparate data sets are conformed to a common data format.

- Geocoding - The description of a location is transformed into coordinates that conform to U.S. and worldwide geographic standards.

- Matching or Linking - Data matching identifies records that refer to the same entity, often across big data sets, and merges or links them.

- Data Quality Monitoring - Frequent data quality checks are essential. Data quality software in combination with machine learning can automatically detect, report, and correct data variations based on predefined business rules and parameters.

- Batch and Real Time - Once the data is initially cleansed, an effective data quality framework should be able to apply the same rules and processes, in batch and in real time, across all applications and data types at scale.
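Standardization and matching often work as a pair: records are first conformed to a common format, then records that share a match key are merged. The sketch below assumes hypothetical normalization rules (collapse whitespace and title-case names, keep the last 10 digits of phone numbers) and an exact (name, phone) match key. Production matching tools typically add fuzzy comparison and survivorship rules, which this sketch omits.

```python
# A minimal sketch of standardization followed by matching/linking.
# Normalization rules and the (name, phone) match key are hypothetical.
import re
from collections import defaultdict


def standardize(record):
    """Conform a raw record to a common format."""
    name = " ".join(record["name"].split()).title()   # collapse whitespace, title-case
    phone = re.sub(r"\D", "", record["phone"])[-10:]  # digits only; assumes 10-digit numbers
    return {"name": name, "phone": phone, "source": record["source"]}


def match_and_merge(records):
    """Group standardized records sharing a match key and merge each group."""
    groups = defaultdict(list)
    for rec in map(standardize, records):
        groups[(rec["name"], rec["phone"])].append(rec)
    merged = []
    for (name, phone), recs in groups.items():
        # Keep one entry per entity and remember which systems it came from.
        merged.append({"name": name, "phone": phone,
                       "sources": sorted(r["source"] for r in recs)})
    return merged


raw = [
    {"name": "jane  DOE", "phone": "(555) 123-4567", "source": "crm"},
    {"name": "Jane Doe", "phone": "+1 555.123.4567", "source": "billing"},
    {"name": "John Roe", "phone": "555-987-6543", "source": "crm"},
]

for entity in match_and_merge(raw):
    print(entity)  # two entities; the Jane Doe entry lists sources ['billing', 'crm']
```

Without the standardization step, the two Jane Doe records would not share a key and would survive as duplicates.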

A good data quality service should provide a data quality dashboard that delivers a flexible user experience and can be tailored to the specific needs of the data stewards and data scientists overseeing data quality. These tools can test data quality, but they cannot fix data that is completely broken or incomplete. A solid data management framework should be in place to develop, execute, and manage the policies, strategies, and programs that govern, secure, and enhance the value of data collected by an organization.

Data Quality vs Data Integrity

Data quality oversight is just one component of data integrity, which refers to the process of making data useful to the organization. The four main components of data integrity are:

- Data Integration: Data from disparate sources must be seamlessly integrated.

- Data Quality: Data must be complete, unique, valid, timely, consistent, and accurate.

- Location Intelligence: Location insights add a layer of richness to data and make it more actionable.

- Data Enrichment: Data enrichment provides a more complete, contextualized view of data by incorporating data from external sources, such as customer, business, and location data.

Data Quality Assurance vs Data Quality Control

Data quality assurance is the process of identifying and eliminating anomalies through data profiling and cleansing. Data quality control is performed both before and after quality assurance and governs how data is used by an application. Before quality assurance, quality control restricts inputs; afterward, information gathered during quality assurance guides the quality control process.
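One way to picture quality control as an input gate is a list of named rules that every incoming record must pass before it reaches quality assurance. The rules, field names, and sample records below are hypothetical. The feedback loop described above could be modeled by appending new rules to `RULES` once quality assurance uncovers a recurring problem.

```python
# A minimal sketch of quality control as an input gate: records that break
# a rule are held back before quality assurance (profiling, cleansing) runs.
# Rules, field names, and sample records are hypothetical examples.
from typing import Callable

Rule = tuple[str, Callable[[dict], bool]]

RULES: list[Rule] = [
    ("id is present", lambda r: r.get("id") is not None),
    ("age is 0-120", lambda r: type(r.get("age")) is int and 0 <= r["age"] <= 120),
    ("country is a 2-letter code",
     lambda r: isinstance(r.get("country"), str) and len(r["country"]) == 2
     and r["country"].isalpha() and r["country"].isupper()),
]


def gate(records, rules=RULES):
    """Split records into accepted ones and (record, failed rule names) pairs."""
    accepted, rejected = [], []
    for rec in records:
        failed = [name for name, check in rules if not check(rec)]
        if failed:
            rejected.append((rec, failed))
        else:
            accepted.append(rec)
    return accepted, rejected


incoming = [
    {"id": 1, "age": 34, "country": "US"},
    {"id": None, "age": 34, "country": "US"},
    {"id": 3, "age": 250, "country": "usa"},
]

accepted, rejected = gate(incoming)
print(len(accepted), "accepted")
for rec, failed in rejected:
    print(rec, "->", failed)
```

Reporting the names of failed rules, rather than a bare pass/fail flag, gives quality assurance the detail it needs to trace why records were rejected.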

The quality control process is important for detecting duplicates, outliers, errors, and missing information. Some real-life data quality examples include:

- Healthcare: Accurate, complete, and unique patient data is essential for risk management and for fast, accurate billing.

- Public Sector: Accurate, complete, and consistent data is essential to track the progress of current projects and proposed initiatives.

- Financial Services: Sensitive financial data must be identified and protected, reporting processes must be automated, and regulatory compliance issues must be remediated.

- Manufacturing: Accurate customer and vendor data must be maintained in order to track spending, reduce operational costs, and create alerts for quality assurance issues and maintenance needs.
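The quality control checks named above, such as flagging outliers and missing information, can be sketched for a single numeric column. This example swaps in Tukey's interquartile-range (IQR) fence as the outlier rule; that rule, the 1.5 multiplier, and the sample readings are assumptions, not a method the text prescribes.

```python
# A minimal sketch of two quality control checks on one numeric column:
# missing values and outliers. The IQR fence (Tukey's rule) with k=1.5 is
# one common choice of outlier rule, used here as an assumption.
import statistics


def find_missing(values):
    """Return indices of missing (None) values."""
    return [i for i, v in enumerate(values) if v is None]


def find_outliers(values, k=1.5):
    """Return (index, value) pairs outside [Q1 - k*IQR, Q3 + k*IQR], ignoring missing values."""
    present = [v for v in values if v is not None]
    q1, _, q3 = statistics.quantiles(present, n=4)  # needs at least two values
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [(i, v) for i, v in enumerate(values)
            if v is not None and not low <= v <= high]


readings = [12.0, 13.5, None, 12.8, 14.1, 13.0, 95.0, 12.2, 13.7]

print("missing at:", find_missing(readings))   # [2]
print("outliers:", find_outliers(readings))    # [(6, 95.0)]
```

An IQR fence is less sensitive to the outliers it is trying to find than a mean and standard deviation rule, which is why it is a common default for small samples.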

Why Data Quality is Important to an Organization

An increasing number of organizations are using data to inform their decisions regarding marketing, product development, communications strategies and more. High quality data can be processed and analyzed quickly, leading to better and faster insights that drive business intelligence efforts and big data analytics.

Good data quality management helps extract greater value from data sets, and contributes to reduced risks and costs, increased efficiency and productivity, more informed decision-making, better audience targeting, more effective marketing campaigns, better customer relations, and an overall stronger competitive edge.

Poor data quality can cloud visibility into operations, making it harder to maintain regulatory compliance; waste time and labor on manually reprocessing inaccurate data; fragment the view of data, making it difficult to discover valuable customer opportunities; damage brand reputation; and even threaten public safety.

Does HEAVY.AI Offer a Data Quality Solution?

Automated, real-time monitoring is a valuable component of data quality management. HEAVY.AI offers real-time monitoring and analytics for thorough data quality assessments. As the pioneer in accelerated analytics, the HEAVY.AI Data Science Platform is used to find real-time data insights beyond the limits of mainstream analytics tools. See HEAVY.AI's Complete Introduction to Data Science to learn more about how businesses process big data to detect patterns and uncover critical insights.